On 8th January 2016, Terraform v0.6.9 was released, and amongst the new features was a VMware vCloud Director provider. It was a community contribution to the open source code base from some smart guys at OpenCredo working for HMRC. They had not only forked VMware's own govcloudair library to create the govcd library and made it work with the vCloud Director API, but had also written a new Terraform provider that uses govcd to manage a number of resources in the vCloud ecosystem.

So following on from my look at Chef Provisioning, I thought I would take Terraform for a spin and see what it could do.

Terraform is purely an infrastructure orchestration tool. Sure, it is cross-platform and multi-cloud-provider aware, but it makes no claims to be a Configuration Management tool, and in fact actively supports Chef as a provisioning tool, which it happily hands control over to once the VMs have booted. Terraform is another tool from HashiCorp, the same people that brought us Vagrant and Packer, and the quality of documentation on their website is first class. Downloadable binaries are available for Linux, Windows and Mac. The download is a ZIP file, so your first task is to unzip the contents somewhere suitable and then make sure the directory is added to your PATH environment variable so that the executables can be found. Terraform is a command-line only tool; there is no UI. For its configuration, it reads all files with a *.tf file extension in the current directory (unless given an alternative directory to scan).

The first thing to do, then, is to connect to the vCloud Director API at Skyscape Cloud Services. Just like Packer's configuration file, Terraform's configuration files support user variables, whose values can either be passed on the command line or read from environment variables. So as not to hard-code our user credentials, let's start by defining a few variables and then use them in the vCloud Director provider configuration.

    variable "vcd_org" {}
    variable "vcd_userid" {}
    variable "vcd_pass" {}

    # Configure the VMware vCloud Director Provider
    provider "vcd" {
      user     = "${var.vcd_userid}"
      org      = "${var.vcd_org}"
      password = "${var.vcd_pass}"
      url      = "https://api.vcd.portal.skyscapecloud.com/api"
    }

The values of these three variables can be set either on the Terraform command line, for example --var vcd_org=1-2-33-456789, or by creating an environment variable of the same name prefixed with TF_VAR_, for example: SET TF_VAR_vcd_org=1-2-33-456789

Note that the environment variable name is case sensitive.
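For values that are not secret, a variables file is another option. The sketch below assumes a file named terraform.tfvars sitting in the same directory as the *.tf files, which Terraform loads automatically. The values are placeholders of my own, and the password is deliberately left out so that it can still come from TF_VAR_vcd_pass or the command line.

```hcl
# terraform.tfvars (illustrative placeholder values, not real credentials)
vcd_org    = "1-2-33-456789"
vcd_userid = "my-user-id"   # placeholder; use your own vCloud Director user id
```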

The first infrastructure component that we'll want to create is a routed vDC Network on which we will create our VMs. This is supported using the "vcd_network" resource description.

    variable "edge_gateway" { default = "Edge Gateway Name" }
    variable "mgt_net_cidr" { default = "10.10.0.0/24" }

    # Create our networks
    resource "vcd_network" "mgt_net" {
      name         = "Management Network"
      edge_gateway = "${var.edge_gateway}"
      gateway      = "${cidrhost(var.mgt_net_cidr, 1)}"

      static_ip_pool {
        start_address = "${cidrhost(var.mgt_net_cidr, 10)}"
        end_address   = "${cidrhost(var.mgt_net_cidr, 200)}"
      }
    }

Here we need to specify the name of the vShield Edge allocated to our vDC, giving it an IP address on our new network. We are also specifying an address range to use for our static IP Address pool. The resource description also supports setting up a DHCP pool, but more on this in a bit.

We are adding some intelligence into our configuration by making use of one of Terraform's built-in functions, cidrhost(), instead of hard-coding different IP addresses. This allows us to use a variable to pass in the CIDR notation for the subnet to be used on this network, and from that subnet allocate the first address in the range for the gateway on the vShield Edge, and the 10th to 200th addresses as our static pool.

Seems very straightforward so far. Now how about spinning up a VM on our new subnet? Well, similar to Fog's abstraction that we looked at with Chef Provisioning in my last post, Terraform currently only supports the creation of a single VM per vApp.
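To see what cidrhost() actually returns, one option is to expose the calculated addresses as outputs. This is only a sketch: the output names are my own and are not part of the original configuration. It relies on the mgt_net_cidr variable declared above.

```hcl
# Illustrative outputs; the names are hypothetical.
# With the default mgt_net_cidr of "10.10.0.0/24":
output "mgt_gateway" {
  value = "${cidrhost(var.mgt_net_cidr, 1)}"    # 10.10.0.1
}

output "mgt_pool_start" {
  value = "${cidrhost(var.mgt_net_cidr, 10)}"   # 10.10.0.10
}

output "mgt_pool_end" {
  value = "${cidrhost(var.mgt_net_cidr, 200)}"  # 10.10.0.200
}
```

Changing mgt_net_cidr to a different subnet moves all three addresses with it, which is the point of not hard-coding them.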

    variable "catalog" { default = "DevOps" }
    variable "vapp_template" { default = "centos71" }
    variable "jumpbox_int_ip" { default = "10.10.0.100" }

    # Jumpbox VM on the Management Network
    resource "vcd_vapp" "jumpbox" {
      name          = "jump01"
      catalog_name  = "${var.catalog}"
      template_name = "${var.vapp_template}"
      memory        = 512
      cpus          = 1
      network_name  = "${vcd_network.mgt_net.name}"
      ip            = "${var.jumpbox_int_ip}"
    }

Notice here that we can cross-reference the network we have just created by referencing ${resource_type.resource_id.resource_property} - where mgt_net is the Terraform identifier we gave to our Network. By doing this it adds an implicit dependency into our configuration, ensuring that the network gets created first, before then creating the VM. More on Terraform's dependencies and how you can represent them graphically later. The IP address assigned to the VM has to be within the range allocated to the static pool for the network or you will get an error. For the catalog and vApp Template, we will use the centos71 template in our DevOps catalog that we created in my earlier blog post.
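The same reference syntax works anywhere interpolation is allowed, not just inside other resources. As a small sketch (the output name is my own, not part of the original configuration), an output can expose an attribute of the vApp once it has been created:

```hcl
# Hypothetical output using the resource_type.resource_id.resource_property form.
output "jumpbox_internal_ip" {
  value = "${vcd_vapp.jumpbox.ip}"
}
```

After a successful apply, running terraform output jumpbox_internal_ip should print the address assigned to the jumpbox.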

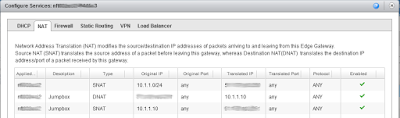

All very painless so far. Now to get access to our jumpbox server from the outside world. We need to open up the firewall rules on the vShield Edge to allow incoming SSH, and set up DNAT rules to forward the connection to our new VM.

    variable "jumpbox_ext_ip" {}

    # Inbound SSH to the Jumpbox server
    resource "vcd_dnat" "jumpbox-ssh" {
      edge_gateway = "${var.edge_gateway}"
      external_ip  = "${var.jumpbox_ext_ip}"
      port         = 22
      internal_ip  = "${var.jumpbox_int_ip}"
    }

    # SNAT Outbound traffic
    resource "vcd_snat" "mgt-outbound" {
      edge_gateway = "${var.edge_gateway}"
      external_ip  = "${var.jumpbox_ext_ip}"
      internal_ip  = "${var.mgt_net_cidr}"
    }

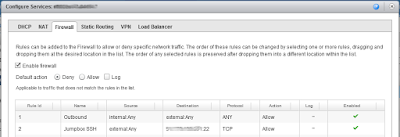

    # Firewall rules on the vShield Edge. The resource name and rule descriptions
    # match the plan output and the depends_on references used later on.
    resource "vcd_firewall_rules" "website-fw" {
      edge_gateway   = "${var.edge_gateway}"
      default_action = "drop"

      rule {
        description      = "allow-jumpbox-ssh"
        policy           = "allow"
        protocol         = "tcp"
        destination_port = "22"
        destination_ip   = "${var.jumpbox_ext_ip}"
        source_port      = "any"
        source_ip        = "any"
      }

      rule {
        description      = "allow-outbound"
        policy           = "allow"
        protocol         = "any"
        destination_port = "any"
        destination_ip   = "any"
        source_port      = "any"
        source_ip        = "${var.mgt_net_cidr}"
      }
    }

And that should be enough to get our first VM up and be able to SSH into it. Now to give it a go. Terraform has a dry-run mode where it evaluates your configuration files, compares them to the current state and shows you what actions would be taken. This is useful if you need to document the planned changes in a change request.

    > terraform plan --var jumpbox_ext_ip=aaa.bbb.ccc.ddd
    Refreshing Terraform state prior to plan...

    The Terraform execution plan has been generated and is shown below.
    Resources are shown in alphabetical order for quick scanning. Green resources
    will be created (or destroyed and then created if an existing resource
    exists), yellow resources are being changed in-place, and red resources
    will be destroyed.

    Note: You didn't specify an "-out" parameter to save this plan, so when
    "apply" is called, Terraform can't guarantee this is what will execute.

    + vcd_dnat.jumpbox-ssh
        edge_gateway: "" => "Edge Gateway Name"
        external_ip:  "" => "aaa.bbb.ccc.ddd"
        internal_ip:  "" => "10.10.0.100"
        port:         "" => "22"

    + vcd_firewall_rules.website-fw
        default_action:          "" => "drop"
        edge_gateway:            "" => "Edge Gateway Name"
        rule.#:                  "" => "2"
        rule.0.description:      "" => "allow-jumpbox-ssh"
        rule.0.destination_ip:   "" => "aaa.bbb.ccc.ddd"
        rule.0.destination_port: "" => "22"
        rule.0.id:               "" => ""
        rule.0.policy:           "" => "allow"
        rule.0.protocol:         "" => "tcp"
        rule.0.source_ip:        "" => "any"
        rule.0.source_port:      "" => "any"
        rule.1.description:      "" => "allow-outbound"
        rule.1.destination_ip:   "" => "any"
        rule.1.destination_port: "" => "any"
        rule.1.id:               "" => ""
        rule.1.policy:           "" => "allow"
        rule.1.protocol:         "" => "any"
        rule.1.source_ip:        "" => "10.10.0.0/24"
        rule.1.source_port:      "" => "any"

    + vcd_network.mgt_net
        dns1:                                   "" => "8.8.8.8"
        dns2:                                   "" => "8.8.4.4"
        edge_gateway:                           "" => "Edge Gateway Name"
        fence_mode:                             "" => "natRouted"
        gateway:                                "" => "10.10.0.1"
        href:                                   "" => ""
        name:                                   "" => "Management Network"
        netmask:                                "" => "255.255.255.0"
        static_ip_pool.#:                       "" => "1"
        static_ip_pool.241192955.end_address:   "" => "10.10.0.200"
        static_ip_pool.241192955.start_address: "" => "10.10.0.10"

    + vcd_snat.mgt-outbound
        edge_gateway: "" => "Edge Gateway Name"
        external_ip:  "" => "aaa.bbb.ccc.ddd"
        internal_ip:  "" => "10.10.0.0/24"

    + vcd_vapp.jumpbox
        catalog_name:  "" => "DevOps"
        cpus:          "" => "1"
        href:          "" => ""
        ip:            "" => "10.10.0.100"
        memory:        "" => "512"
        name:          "" => "jump01"
        network_name:  "" => "Management Network"
        power_on:      "" => "1"
        template_name: "" => "centos71"

    Plan: 5 to add, 0 to change, 0 to destroy.

To go ahead and start the deployment, change the command line to "terraform apply" instead of "terraform plan". At this point, Terraform will go away and start making calls to the vCloud Director API to build out your infrastructure. Since there is an implicit dependency between the jumpbox VM and the mgt_net network it is attached to, Terraform will wait until the network has been created before launching the VM. No other dependencies have been applied at this point, so Terraform will apply the DNAT / SNAT / firewall rules concurrently.

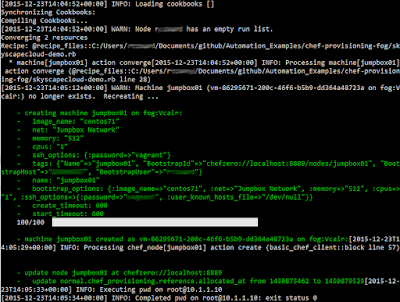

With the "terraform apply" command complete, we now have a new VM running in our vDC on its own network, complete with firewall and NAT rules. Let's not stop there. Although Terraform is not a configuration management tool, it can bootstrap and run a configuration management tool on the new VM after it has booted. It has built-in support for bootstrapping Chef, and also generic file transfer and remote-exec provisioners that allow you to run any script you like to bootstrap your tool of choice. Let's take a look at using Chef to configure our new VM.

The Chef provisioner can be added as part of the vcd_vapp resource definition, in which case, before the resource is marked complete, it will run the chef-client command on the VM, connecting over SSH by default or WinRM for a Windows server, and wait for the results of the Chef converge.

    resource "vcd_vapp" "jumpbox" {
      name          = "jumpbox01"
      catalog_name  = "${var.catalog}"
      template_name = "${var.vapp_template}"
      memory        = 512
      cpus          = 1
      network_name  = "${vcd_network.mgt_net.name}"
      ip            = "${var.jumpbox_int_ip}"

      depends_on = [ "vcd_dnat.jumpbox-ssh", "vcd_firewall_rules.website-fw", "vcd_snat.website-outbound" ]

      connection {
        host     = "${var.jumpbox_ext_ip}"
        user     = "${var.ssh_user}"
        password = "${var.ssh_password}"
      }

      provisioner "chef" {
        run_list               = ["chef-client","chef-client::config","chef-client::delete_validation"]
        node_name              = "${vcd_vapp.jumpbox.name}"
        server_url             = "https://api.chef.io/organizations/${var.chef_organisation}"
        validation_client_name = "skyscapecloud-validator"
        validation_key         = "${file("~/.chef/skyscapecloud-validator.pem")}"
        version                = "${var.chef_client_version}"
      }
    }

Alternatively, to give you more control over where in the resource dependency tree the provisioning steps are run, there is a special null_resource that can be used with the provisioner configurations.

    resource "null_resource" "jumpbox" {
      depends_on = [ "vcd_vapp.jumpbox" ]

      connection {
        host     = "${var.jumpbox_ext_ip}"
        user     = "${var.ssh_user}"
        password = "${var.ssh_password}"
      }

      provisioner "chef" {
        run_list               = ["chef-client","chef-client::config","chef-client::delete_validation"]
        node_name              = "${vcd_vapp.jumpbox.name}"
        server_url             = "https://api.chef.io/organizations/${var.chef_organisation}"
        validation_client_name = "skyscapecloud-validator"
        validation_key         = "${file("~/.chef/skyscapecloud-validator.pem")}"
        version                = "${var.chef_client_version}"
      }
    }
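Both provisioner examples refer to a handful of variables that have not been declared in the snippets so far. A minimal sketch of the declarations might look like the following. I have deliberately left out defaults so that no credentials or versions end up hard-coded, and the descriptions are my own wording rather than anything taken from the full configuration.

```hcl
# Declarations for the variables used by the connection and chef blocks.
# No defaults are given here; supply values with --var or TF_VAR_ variables.
variable "ssh_user" {
  description = "Account used for the SSH connection to the new VM"
}

variable "ssh_password" {
  description = "Password for the SSH account"
}

variable "chef_organisation" {
  description = "Organisation short name on the Chef Server"
}

variable "chef_client_version" {
  description = "Version of chef-client to install on the node"
}
```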

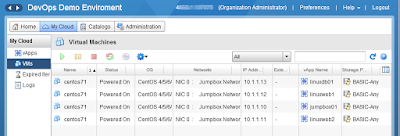

Now we have our jumpbox server built and configured, we can start adding in all the rest of our infrastructure. One really neat feature that Terraform has is the 'count' property on resources. This is useful when building out a cluster of identical servers, such as a web server farm. You can define a single vcd_vapp resource and set the count property to the size of your webfarm. Better still, if you use a variable for the value of the count property, you can create your own 'basic' auto-scaling functionality, taking advantage of Terraform's idempotency to create additional webserver instances when running 'terraform apply --var webserver_count=<bigger number>' to scale up, or to scale-down your webfarm by running 'terraform apply --var webserver_count=<smaller number>'.

Here is how I define my group of webservers that will sit behind the load-balancer.

    resource "vcd_vapp" "webservers" {
      name          = "${format("web%02d", count.index + 1)}"
      catalog_name  = "${var.catalog}"
      template_name = "${var.vapp_template}"
      memory        = 1024
      cpus          = 1
      network_name  = "${vcd_network.web_net.name}"
      ip            = "${cidrhost(var.web_net_cidr, count.index + 100)}"

      count = "${var.webserver_count}"

      depends_on = [ "vcd_vapp.jumpbox", "vcd_snat.website-outbound" ]

      connection {
        bastion_host     = "${var.jumpbox_ext_ip}"
        bastion_user     = "${var.ssh_user}"
        bastion_password = "${var.ssh_password}"

        host     = "${cidrhost(var.web_net_cidr, count.index + 100)}"
        user     = "${var.ssh_user}"
        password = "${var.ssh_password}"
      }

      provisioner "chef" {
        run_list               = [ "chef-client", "chef-client::config", "chef-client::delete_validation", "my_web_app" ]
        node_name              = "${format("web%02d", count.index + 1)}"
        server_url             = "https://api.chef.io/organizations/${var.chef_organisation}"
        validation_client_name = "${var.chef_organisation}-validator"
        validation_key         = "${file("~/.chef/${var.chef_organisation}-validator.pem")}"
        version                = "${var.chef_client_version}"

        attributes {
          "tags" = [ "webserver" ]
        }
      }
    }

Note how we can use "${format("web%02d", count.index + 1)}" to dynamically create the VM name based on the incrementing count, as well as more use of the cidrhost() function to calculate the VM's IP Address. The counter starts at zero, so we add 1 to the count index to give us names 'web01', 'web02', etc. We can also use the jumpbox server's external address for the bastion_host in the connection details and Terraform will ssh relay through the jumpbox server to reach the internal address of the webservers.
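The webserver definition refers to a web_net network and to the web_net_cidr and webserver_count variables, none of which appear in the snippets in this post. The real definitions are in the GitHub repository and may well differ. As a rough sketch only, assuming a second routed network modelled on the management network, with a subnet and pool range that I have picked purely for illustration:

```hcl
# Assumed values for illustration; check the full configuration for the real ones.
variable "web_net_cidr"    { default = "10.20.0.0/24" }
variable "webserver_count" { default = 2 }

resource "vcd_network" "web_net" {
  name         = "Web Network"
  edge_gateway = "${var.edge_gateway}"
  gateway      = "${cidrhost(var.web_net_cidr, 1)}"

  # The webservers take addresses from host number 100 upwards,
  # so the static pool has to cover that range.
  static_ip_pool {
    start_address = "${cidrhost(var.web_net_cidr, 100)}"
    end_address   = "${cidrhost(var.web_net_cidr, 200)}"
  }
}
```

With these defaults, the two webservers would be web01 and web02 on the 100th and 101st host addresses of the subnet.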

The full configuration for Terraform can be downloaded from Skyscape's GitHub repository. In addition to the jumpbox and webservers covered above, it also includes a database server and a load-balancer, along with sample Chef configuration to register against a Chef Server and deploy a set of cookbooks to configure the servers. I won't go into the Chef Server configuration here, but take a look at the README.md file on GitHub for some pointers.

Gotchas?

The only issue I have with Terraform so far is that it does not make the call to VMware's Guest Customisation to set the VM hostname to match the vApp name and the VM name as displayed in vCloud Director. When the VMs boot up, they have the same hostname as the VM that was cloned from the vApp Template used. This is not a big issue, since it is trivial to use Chef or any other configuration management tool to set the hostname as required.
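If you are not using a configuration management tool at all, the generic remote-exec provisioner could do the same job. The following is a sketch under a few assumptions: the resource name is my own, the template is CentOS 7 (so hostnamectl is available), the SSH account is allowed to change the hostname, and the NAT and firewall rules that make the jumpbox reachable are already in place.

```hcl
# Hypothetical example: set the guest hostname to match the vApp name.
resource "null_resource" "jumpbox_hostname" {
  depends_on = [ "vcd_vapp.jumpbox" ]

  connection {
    host     = "${var.jumpbox_ext_ip}"
    user     = "${var.ssh_user}"
    password = "${var.ssh_password}"
  }

  provisioner "remote-exec" {
    inline = [
      # Assumes the SSH account has permission to change the hostname
      "hostnamectl set-hostname ${vcd_vapp.jumpbox.name}"
    ]
  }
}
```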

In Summary

It rocked. Having abandoned plans to use the vShield Edge load-balancer capability in favour of our own HAProxy installation, the Terraform configuration in GitHub will create a complete 3-Tier Web Application from scratch, complete with network isolation across multiple networks, a load-balancer, webserver farm and back-end database server. Chef provisions all the software and server configuration needed, so at the end of the Terraform run I can just point my web browser at the external IP address and have it start serving my web pages.

Let's check off my test criteria:

| Criteria | Pass / Fail | Comments |
|---|---|---|
| Website hosted in a vDC | Pass | vDC / Networks / NAT / Firewall rules all managed by Terraform. |
| Deploy 2 Webservers behind a load-balancer | Pass | The webservers were created and web app deployed. The HAProxy load-balancer was dynamically configured to use all the webservers in the farm, independent of the webserver_count value used to size the webfarm. |
| Deploy a database server | Pass | The database server was deployed correctly, and the db config was inserted into the webservers allowing them to connect. |
| Deploy a jumpbox server | Pass | The server was deployed correctly and all the NAT rules were managed by Terraform. |

Overall then, a resounding success. The Terraform vCloud Director provider is still very young, and is not as feature rich as some of the other providers for other cloud vendors. That said, it is an open source project and the developers from OpenCredo are happy to collaborate on new features or accept pull requests from other contributors. I am already pulling together a list of feature enhancements that I plan to contribute to the code base.

Terraform also has many more features than I've got time to mention in this post. In addition to VM provisioning, it has several providers to support various resources from DNS records to SQL databases. It also has a concept of code re-use in the form of modules, and can store its state information on remote servers to allow teams of users to collaborate on the same infrastructure.

For end-to-end integration into your deploy pipeline, it also combines with HashiCorp's Atlas cloud service, giving you a complete framework and UI for linking your GitHub repository together with automated 'plans' when raising pull requests to validate configuration changes, feeding back the results to the PR, and an authorisation framework for automatically deploying merged changes.